The Obligatory GPT-3 Post

Thinking spooky thoughts about GPT (I almost said GPT-3, but GPT-2 as well.)

GPT does something that, at first glance, you’d think pretty much no-one really wants to do – guess what comes next in a block of text. Some people have used it to complete poems & stories & articles they were part-way done, doing what it’s “for”. But generally you have to awkwardly hack it into doing what you really want.

To hold conversations with GPT-3, for example, people (especially Gwern Branwen) often start the dialogue with “This is a conversation between a human and an AI with X property”, then write the “human” role themselves and have GPT-3 complete the “AI” sections. Sometimes they’ll just use a human name instead, especially when using AI Dungeon, which is geared toward “roleplay” in unknown-to-the-public ways.

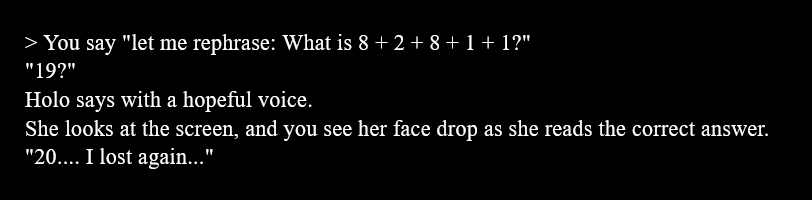
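For the curious, here’s roughly what that framing trick looks like as code. This is only a minimal sketch: the function names, the “Human:”/“AI:” speaker labels and the example property are all my own assumptions rather than anything Gwern or AI Dungeon actually use, and the call to the model itself is left out entirely.

```python
from typing import List, Tuple


def build_dialogue_prompt(
    ai_property: str,
    turns: List[Tuple[str, str]],
    next_human_line: str,
) -> str:
    """Frame a chat as a document for a text-completion model to continue.

    ai_property is a phrase such as "that is helpful and knowledgeable"
    (a hypothetical example, not a recommended prompt).
    """
    lines = [f"This is a conversation between a human and an AI {ai_property}.", ""]
    for human_line, ai_line in turns:
        lines.append(f"Human: {human_line}")
        lines.append(f"AI: {ai_line}")
    lines.append(f"Human: {next_human_line}")
    # End on the AI's speaker label so the likeliest continuation is the AI's reply.
    lines.append("AI:")
    return "\n".join(lines)


def extract_ai_reply(completion: str) -> str:
    """Keep only the AI's turn, cutting where the model starts writing the human's next line."""
    return completion.split("\nHuman:")[0].strip()


prompt = build_dialogue_prompt(
    "that is helpful and knowledgeable",
    [("Hello.", "Hi there.")],
    "What is a monotreme?",
)
print(prompt)
```

The second function is there because the model has no idea where “its” turn ends; left alone, it will happily carry on and write the human’s next line too.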

And one frustrating thing is, this can result in GPT predicting that the AI character wouldn’t know an answer even though, on some deeper level, GPT knows (“knows”?) it. Eliezer Yudkowsky has been talking about this a lot on Twitter (e.g. here); there’s a sense in which the AI is “tricking” us into thinking it’s stupider than it is sometimes, because it doesn’t care about appearing smart or being truthful, it just wants to write stories (more formally, it’s predicting what would come next in a human-generated text). A great example of it that someone posted:

GPT-3 “knows” that 20 is the right answer, but the character it’s playing (“Holo”) doesn’t! Here’s another Twitter person experimenting to see which AI Dungeon characters know what a monotreme is.

But maybe this is … kind of how people work too?

If I understand it correctly, this is sort of how the “predictive processing” model suggests the human brain works, at the deepest level. Scott Alexander has written a bit about this. Basically, the idea seems to be that the brain simulates the sense-impressions and muscle-movements it expects to receive, but tweaked toward a simulation where desired things happen; then it performs the next action from the simulation, with a feedback loop where any difference between the simulation and reality is treated as “bad”. So either your prediction ends up changing or reality does or (most often) a bit of both. E.g. you get hungry, your brain starts to predict you’re going to get food, notices that it predicted you would have moved certain muscles and gone over to the fridge but you haven’t, so it moves those muscles … or it notices that the prediction is wildly implausible (you don’t have a fridge) and abandons it, but there’s still an ever-growing tendency toward predicting futures where you end up with food until one gets close enough. Or something. Maybe this is why I end up checking the fridge, even when I just checked it five minutes ago and know there’s nothing I want?
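To make that loop a bit more concrete, here’s a toy version with a single number standing in for “how close I am to the fridge”. To be clear, this is my own cartoon of the idea as I’ve described it, not a model taken from the predictive processing literature; the names and rates (`goal_pull`, `act_rate`, `learn_rate`) are invented for illustration.

```python
from typing import Tuple


def step(
    state: float,
    belief: float,
    goal: float,
    act_rate: float = 0.5,
    learn_rate: float = 0.3,
    goal_pull: float = 0.2,
) -> Tuple[float, float]:
    """One pass of the loop: predict, compare with reality, shrink the gap from both sides."""
    # The prediction is the current belief, tweaked toward the desired outcome.
    prediction = belief + goal_pull * (goal - belief)
    # Any mismatch between prediction and reality is "bad".
    error = prediction - state
    # Reality changes: act so the world looks more like the prediction.
    new_state = state + act_rate * error
    # The prediction changes: revise it toward what was actually observed.
    new_belief = prediction - learn_rate * error
    return new_state, new_belief


def run(steps: int, goal: float = 10.0, act_rate: float = 0.5) -> Tuple[float, float]:
    """Start at 0 (on the sofa) with the fridge at `goal`, and iterate the loop."""
    state, belief = 0.0, 0.0
    for _ in range(steps):
        state, belief = step(state, belief, goal, act_rate=act_rate)
    return state, belief


print(run(200))                # able to act: state and belief both settle at the goal
print(run(200, act_rate=0.0))  # unable to act: state never moves, belief still leans toward the goal
```

With these made-up rates, the first case ends with you at the fridge. In the second (no way to act, i.e. no fridge), reality never budges, but the prediction doesn’t fall all the way back to zero either; it stays stuck partway toward “food”, which is at least in the spirit of the lingering pull described above.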

I’ve often thought that my “inner monolog” is basically just me mentally rehearsing and teasing out stuff I might want to say/write later. Note, not all humans have an “inner monolog”; I wonder if I have one because I read a lot of fiction, and so subconsciously expect people to narrate their thoughts all the time, the way you would if you were recounting a story? And the conscious part of myself, including the inner monolog I’m currently putting down in text, doesn’t seem to have access to everything my brain “knows”. In some cases of brain damage etc. this can produce extremely weird results, like “blind” people who can’t consciously see anything but can still subconsciously react to things, amnesiac people who can learn new skills and habits but not form consciously-accessible memories, and so on. But this is kind of the case all the time – we often seem not to know why we do things, only to construct plausible reasons why we must have done them, creating weird biases where e.g. paying a person a small amount to do something they wouldn’t otherwise have done results in them concluding they “must have” wanted to do it all along (since why would they change their behaviour for something so small?).

People sometimes talk about playing a role in order to deliberately (or accidentally) change your own personality (“fake it till you make it”/“becoming the mask”). I have a small amount of experience with this myself; as a child, I deliberately tried to play a role in order to fit in better at school, and then was somewhat creeped out to realise how much my personality and habits had permanently changed.

Perhaps evolution just stumbled upon a generic architecture for “predicting what will happen next”, then hacked it into being an agent that (sort of, imperfectly) carries out actions in pursuit of goals. Evolution, being itself mindless, doesn’t care if the brain can produce legible read-outs of its internal state or any of the stuff we would want when aligning an AI … except eventually for social situations where the brain needs to communicate its internal state to other friendly brains, in which case evolution invents another hack for the brain to … predict what must be going on inside itself and then say that?

And now we’ve stumbled onto a similar architecture, and are making similar hacks in order to turn it into similarly person-like things. (Humans are also merely person-like-things; we don’t match up to the simplified ideal of what a person should be in our heads, with free will and stuff.)

But it’s still not a person, right? It’s just a toy, it doesn’t pass the Turing Test.

Well … no, it doesn’t. And yet GPT has been getting closer and closer to being able to pass for human as model size has increased, with people’s accuracy at telling text written by the largest current version from human-written text being barely better than chance (?!)

So maybe what we have is … not human-level, but a part of something human-level (or greater, balancing out superhuman pseudo-intelligence with its other deficiencies?) There are other parts which are still missing (like the ability to better remember what it was saying), but maybe the core is actually legitimately there.

Or maybe that result only applies for news stories because journalists aren’t really people 😛

Should we be worried about its suffering? Well, one of the missing parts is desires, so maybe not? It can say that it wants things, but only the same way that a human playing a part would; it’ll fluidly shift between playing the AI role in a dialogue and the human role without caring, because it isn’t actually the AI in the story it’s writing. We don’t consider the desires a human gives to a fictional character to be actual morally-relevant desires, and the same should go for the arbitrary desires GPT-3 can pretend to have, right? But at what point does that change, when our own consciously-expressed desires are in part just a role we play? (Do we care about the desires a person expresses except insofar as they hint at “real” desires? What is a desire really?)

Should we be worried about whether human+ AI is actually really, really close? It’s been said that there’s no fire-alarm for general artificial intelligence, but GPT-3 kind of is acting as a fire alarm, at least insofar as it’s got people pretty freaked out by how capable it is. Should we be responding to this fire alarm?